Diff-TTSG

Denoising probabilistic integrated speech and gesture synthesis

This project is maintained by shivammehta25


Shivam Mehta, Siyang Wang, Simon Alexanderson, Jonas Beskow, Éva Székely, and Gustav Eje Henter

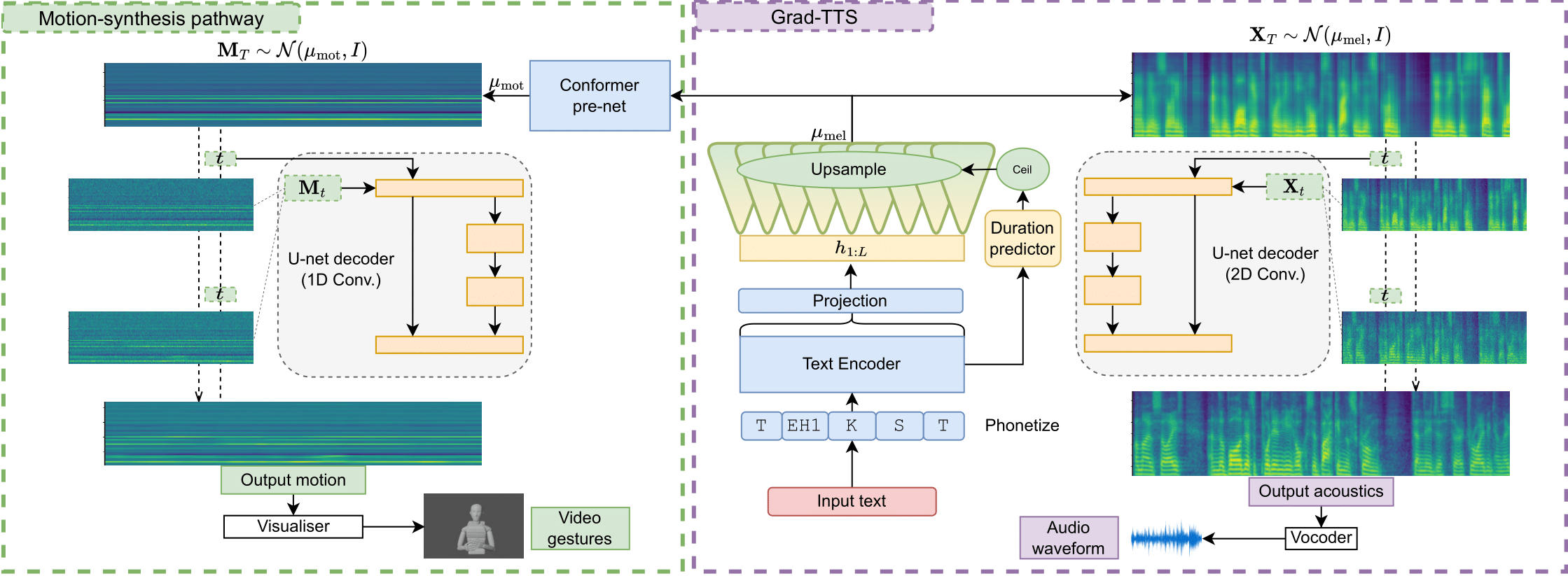

We present Diff-TTSG, the first diffusion model that learns to synthesise speech and gestures jointly. Our method is probabilistic and non-autoregressive, and can be trained on small datasets from scratch. In addition, to showcase the efficacy of such systems and pave the way for their evaluation, we describe a set of careful uni- and multi-modal subjective tests for evaluating integrated speech and gesture synthesis systems.

For more information, please read our paper, presented at the ISCA Speech Synthesis Workshop (SSW) 2023.

Code

Code available on GitHub: https://github.com/shivammehta25/Diff-TTSG

A demo is also available on Hugging Face Spaces: https://huggingface.co/spaces/shivammehta25/Diff-TTSG

Teaser

Architecture

Model output

Beat gestures


And the train stopped. The door opened. I got out first, then Jack Kane got out, Ronan got out, Louise got out.

Positive-negative emotional pairs

| Positive emotion | Negative emotion |
|---|---|


Positive: I went to a comedy show last night, and it was absolutely hilarious. The jokes were fresh and clever, and I laughed so hard my sides hurt.

Negative: I tried meditating to relieve stress, but it just made me feel more anxious. I couldn't stop thinking about all the things I needed to do, and it felt like a waste of time.

Stimuli from the evaluation test

Speech-only evaluation

You walk around Dublin city centre and even if you try and strike up a conversation with somebody it’s impossible because everyone has their headphones in. And again, I would listen to podcasts sometimes with my headphones in walking around the streets.

| NAT | Diff-TTSG | T2-ISG | Grad-TTS |
|---|---|---|---|

And then a few weeks later after that my parents were away my granny was minding us and again I don’t know why I told my brother to do this but I was like here.

| NAT | Diff-TTSG | T2-ISG | Grad-TTS |
|---|---|---|---|

But I remember once my parents were just downstairs in the kitchen and this is when mobile phones just began coming out. So, like my oldest brother and my oldest sister had a mobile phone each I’m pretty sure.

| NAT | Diff-TTSG | T2-ISG | Grad-TTS |
|---|---|---|---|

Eventually got to a point where I was like okay I need to stop doing this sort of stuff. Like it just doesn’t make any sense as to why because I was getting hurt like there was times where like, I was like tearing muscles and I never broke a bone which I’m pretty proud of.

| NAT | Diff-TTSG | T2-ISG | Grad-TTS |
|---|---|---|---|

Gesture-only evaluation (no audio)


If you like touched it, it was excruciatingly sore. And I went up to the teachers I was like look I'm after like really damaging my finger I might have to go to the doctors.

| Text prompt # | NAT | Diff-TTSG | T2-ISG | [Grad-TTS]+M |
|---|---|---|---|---|
| 1 | | | | |
| 2 | | | | |
| 3 | | | | |
| 4 | | | | |

Speech-and-gesture evaluation

| Matched | Mismatched |
|---|---|

*Note: Matched and mismatched stimuli were not labelled in the study and were presented in random order.


Yeah and then obviously there, there's certain choirs that come down to the church. There's a woman called, I can't remember her name. But she has an incredible voice. Like an amazing voice.

| Text prompt # | NAT | Diff-TTSG | T2-ISG |
|---|---|---|---|
| 1 | | | |
| 2 | | | |
| 3 | | | |
| 4 | | | |

Importance of the diffusion model

To illustrate the importance of using diffusion in modelling both speech and motion, these stimuli compare synthesis from condition Diff-TTSG to synthesis directly from the μ values predicted by the Diff-TTSG decoder and Conformer, that is, without the diffusion step.

| μ (before diffusion) | Final output (after diffusion) |
|---|---|


But and again so that doesn't help people like myself and my friend who actually want to strike up a conversation with a genuine person out in the open because we don't want to go online we don't feel like we have to do that.
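To give a rough feel for the difference between the μ values and the final output, the toy sketch below starts from noise centred on μ and integrates a reverse-time ODE towards a target. It is not the Diff-TTSG code. It assumes a Grad-TTS-style formulation (a prior N(μ, I) and a linear noise schedule), and replaces the learned score network with a hypothetical closed-form `toy_score` that is exact only when the "data" is a single known point. The schedule constants, the function names, and the step count are all illustrative assumptions.

```python
import math
import random

# Assumed linear noise schedule; the values are illustrative only.
BETA_0, BETA_1 = 0.05, 20.0


def beta(t):
    """Noise rate at time t in [0, 1]."""
    return BETA_0 + (BETA_1 - BETA_0) * t


def cum_beta(t):
    """Integral of beta from 0 to t."""
    return BETA_0 * t + 0.5 * (BETA_1 - BETA_0) * t * t


def toy_score(x, mu, target, t):
    """Hypothetical stand-in for a learned score network.

    Exact score of the noised distribution when the data distribution
    is the single point `target` and the forward process drifts towards mu.
    """
    decay = math.exp(-0.5 * cum_beta(t))
    mean = (1.0 - decay) * mu + decay * target
    var = 1.0 - math.exp(-cum_beta(t))
    return -(x - mean) / var


def refine(mu, target, n_steps=50, seed=0):
    """Euler integration of the reverse-time ODE from t=1 down to t=0."""
    rng = random.Random(seed)
    # Start from the prior: unit-variance Gaussian noise centred on mu.
    x = [m + rng.gauss(0.0, 1.0) for m in mu]
    h = 1.0 / n_steps
    for i in range(n_steps):
        t = 1.0 - (i + 0.5) * h  # midpoint of the current time interval
        for k in range(len(x)):
            s = toy_score(x[k], mu[k], target[k], t)
            x[k] -= 0.5 * (mu[k] - x[k] - s) * beta(t) * h
    return x


def mean_abs_error(a, b):
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


mu = [0.0, 0.5, 1.0, 0.5]        # stand-in for predicted mean features
target = [1.0, -0.5, 2.0, 1.5]   # stand-in for detailed "natural" features
refined = refine(mu, target)

print("error of mu:     ", mean_abs_error(mu, target))
print("error of refined:", mean_abs_error(refined, target))
```

In this toy setting the refined output ends up much closer to the target than μ does. In a real system the score comes from a trained network and the output is a sample from a learned distribution rather than a single point, so the sketch only illustrates the mechanics of the refinement.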

Citation information

If you use or build on our method or code for your research, please cite our paper:

@inproceedings{mehta2023diff,
  author={Mehta, Shivam and Wang, Siyang and Alexanderson, Simon and Beskow, Jonas and Sz{\'e}kely, {\'E}va and Henter, Gustav Eje},
  title={Diff-TTSG: Denoising probabilistic integrated speech and gesture synthesis},
  year={2023},
  booktitle={Proc. ISCA Speech Synthesis Workshop (SSW)},
  pages={150--156},
  doi={10.21437/SSW.2023-24}
}